Understanding content validity: Examples and FAQ

Skills assessments are a valuable method for evaluating candidates' skills, traits, and abilities prior to making hiring decisions. However, it's important to ensure that the tools you use are precise, accurate, and valid.

Test validity is a complex concept that encompasses different areas like criterion validity, construct validity, and content validity. Content validity is a type of validity that helps you determine whether your tests adequately cover the relevant knowledge and skills you aim to assess.

In this article, we define content validity, discuss evidence of content validity, explore how TestGorilla ensures the content validity of its tests, and explain the importance of using valid tests in your hiring process.

What is content validity?

Content validity is the extent to which a test comprehensively measures the specific skill, ability, personality trait, or work preference it's designed to measure. Good content validity helps you to be confident that your tests accurately represent all relevant aspects of the subject matter they intend to measure.

Imagine you’re creating a test to evaluate someone’s math ability. To truly assess their knowledge, the test should cover various topics like arithmetic, geometry, and algebra. If the test only includes arithmetic questions, it would lack content validity because it doesn’t represent the full range of math skills the person should be able to demonstrate. A test with strong content validity would include questions from all of these areas, providing a well-rounded evaluation of the individual’s math capabilities.

Evidence of content validity: Domain specification, expert judgment, pilot testing

To confirm that a test has strong content validity, you need evidence. Three ways to gather it are domain specification, expert judgment, and pilot testing.

1. Domain specification

Domain specification refers to the process of clearly defining the test’s construct – the abstract concept or quality that the test aims to measure, such as math ability or leadership. This involves identifying the specific knowledge, skills, and abilities that a test is intended to assess.

Imagine that you are creating a syllabus for a course. To ensure your students learn everything they need to know, you would first outline all the topics and skills that your course should cover. This comprehensive list of topics and skills represents the content domain for your course.

Similarly, in the context of testing, the content domain refers to the entire range of knowledge, skills, behaviors, or attributes that a test is designed to evaluate. It represents everything that is relevant to the construct being measured. As mentioned earlier, a good math test covers the full range of relevant skills rather than focusing solely on a single area such as algebra or geometry.

Domain specification is essential because it ensures that a test fully aligns with the construct, making the test a comprehensive and accurate tool for evaluations and decision-making.

2. Expert judgment

Expert judgment involves engaging subject matter experts (SMEs) in the development and evaluation of your test. These experts ensure that each item on the test is relevant to the subject, clear, and truly representative of the construct being measured.

Imagine you’re tasked with developing a certification exam for a highly specialized field, such as cybersecurity. While you may research to gain a basic understanding of the subject, consulting with SMEs who have deep, hands-on experience in these areas is crucial. Their expertise ensures that each test item is not only relevant and clear but also accurately reflects the depth and breadth of the knowledge and skills required in the field.

Involving SMEs is essential because their insights help ensure that the test is not just a collection of random questions but a carefully curated tool that covers all critical aspects of the content. This approach enhances the content validity of the test, making it a more effective and trustworthy instrument for assessing what matters.

3. Pilot testing

Pilot testing is a critical step in validating a test before it is widely administered. The test is given to a small, representative group to collect data on its performance and gather feedback. This helps identify any issues with the test items, such as unclear wording, inappropriate difficulty levels, or gaps in content coverage.

Once the data is gathered, the results are carefully analyzed to determine whether the test items function as intended. SMEs can also review the test items again to check for any content gaps. This ensures that the test fully aligns with the content domain it is supposed to measure.

Pilot testing is essential because it allows you to refine the test based on real-world data and expert insights. This process helps ensure that the test is comprehensive, clear, and accurate, enhancing its content validity and making it better at assessing the intended knowledge and skills.
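
One common way to analyze pilot data is classical item analysis. The minimal sketch below computes each item's difficulty index (the proportion of pilot takers who answered it correctly) and flags items that look too easy or too hard. The response data, the helper names `item_difficulty` and `flag_items`, and the 0.25 and 0.9 thresholds are illustrative assumptions, not values from this article.

```python
# Hypothetical pilot responses: one row per candidate, one column per item.
# 1 = answered correctly, 0 = answered incorrectly.
responses = [
    [1, 1, 0, 1],
    [1, 0, 0, 1],
    [1, 1, 0, 0],
    [1, 1, 0, 1],
    [1, 0, 1, 1],
]


def item_difficulty(responses):
    """Return the proportion of candidates who answered each item correctly."""
    n_candidates = len(responses)
    n_items = len(responses[0])
    return [
        sum(row[i] for row in responses) / n_candidates
        for i in range(n_items)
    ]


def flag_items(difficulties, too_hard=0.25, too_easy=0.9):
    """Flag items whose difficulty index falls outside the assumed thresholds."""
    flags = {}
    for index, p in enumerate(difficulties):
        if p < too_hard:
            flags[index] = "too hard"
        elif p > too_easy:
            flags[index] = "too easy"
    return flags


difficulties = item_difficulty(responses)
flags = flag_items(difficulties)

for index, p in enumerate(difficulties):
    note = flags.get(index, "ok")
    print(f"item {index}: difficulty = {p:.2f} ({note})")
```

Flagged items are not automatically bad. They are the ones worth sending back to SMEs for a closer look at wording, difficulty, and fit with the content domain.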

Threats to content validity

Decades of research in test development have made it clear that validity is the most important consideration when creating or evaluating different tests.

Threats to content validity can compromise a test’s effectiveness, leading to inaccurate results. That’s why it’s crucial to understand these threats and their potential impact on talent assessments. See the table below for a breakdown of threats to content validity, with an explanation and example for each.

| Threat | Explanation | Example |
| --- | --- | --- |
| Inadequate definition of the construct | Occurs when the constructs are not clearly defined before the test is developed, making it difficult to ensure the test comprehensively measures the construct. | A test for “customer service skills” that only includes technical problem-solving questions, missing communication and empathy, thus failing to assess true customer service abilities. |
| Content irrelevance (Contamination) | When items on the test measure something outside of the intended construct. | An Accounting test that includes questions about general office politics contaminates the content with irrelevant information. |
| Item bias or misalignment | Occurs when items are culturally biased, too difficult, or too easy, leading to misrepresentation of the construct. | A verbal reasoning test that includes items that require the candidates to have specific cultural knowledge, like slang or highly technical words and phrases. |
| Incomplete item sampling | Occurs when the test includes too few items to cover the entire domain of the construct adequately. | A Spanish language test that assesses vocabulary but not grammar and reading comprehension would be incomplete. |

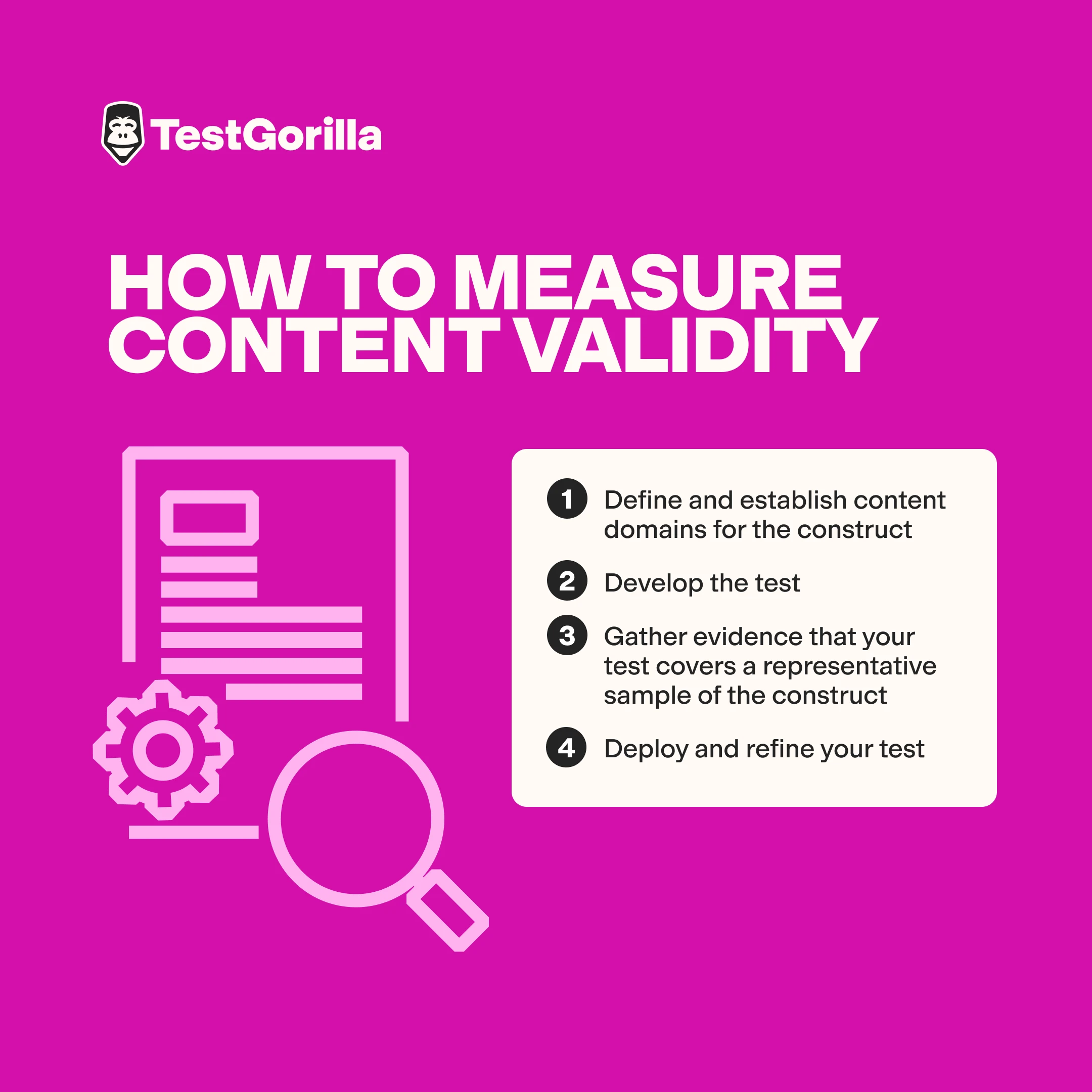

How to measure content validity

Measuring content validity is a process of ensuring your test accurately reflects the construct it aims to measure. This involves defining the content domains, carefully developing test items, and gathering evidence to show that the test covers a representative sample of the construct. After creating the test, ongoing refinement based on feedback and data is crucial for maintaining validity. In the following sections, we'll explore each of these steps in more detail.

1. Define and establish content domains for the construct

To ensure your test accurately measures the intended construct, start by defining and establishing the content domains it should cover. This helps avoid ambiguity and guarantees that your test focuses on all relevant aspects of the construct.

Here’s how to define and establish content domains:

Consult subject matter experts: Discuss with content experts to ensure your understanding of the construct is comprehensive and accurate.

Review existing research: Explore validated tests and literature to understand how similar constructs are defined and measured.

Identify content domains: Break down the construct into specific areas or domains that the test should cover.

Align with objectives: Ensure the identified domains align with your organization’s specific needs and goals.

2. Develop the test

Once you’ve defined the content domains, the next step is to develop a test that covers these areas comprehensively and systematically.

Here’s how to develop your test:

Create a test blueprint: Design a blueprint that outlines the content domains and specifies how many items should be included for each domain.

Develop test items: Create test questions that are aligned with the blueprint and cover the identified content domains. Make sure there are items of different difficulty in each of the content domains.
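
A blueprint can be as simple as a table of content domains and target item counts. The sketch below checks a draft item bank against such a blueprint and reports domains that are short of items, plus items that fall outside the blueprint altogether. The domain names, counts, and the helpers `coverage_gaps` and `off_blueprint_items` are hypothetical and reuse the math example from earlier in this article.

```python
from collections import Counter

# Hypothetical blueprint: content domain -> number of items the test should include.
blueprint = {"arithmetic": 4, "algebra": 3, "geometry": 3}

# Hypothetical draft item bank: each item is tagged with the domain it targets.
draft_items = [
    {"id": "q1", "domain": "arithmetic"},
    {"id": "q2", "domain": "arithmetic"},
    {"id": "q3", "domain": "arithmetic"},
    {"id": "q4", "domain": "arithmetic"},
    {"id": "q5", "domain": "algebra"},
    {"id": "q6", "domain": "algebra"},
    {"id": "q7", "domain": "geometry"},
    {"id": "q8", "domain": "statistics"},  # not part of the blueprint
]


def coverage_gaps(blueprint, items):
    """Return {domain: missing_count} for domains with fewer items than planned."""
    written = Counter(item["domain"] for item in items)
    return {
        domain: target - written[domain]
        for domain, target in blueprint.items()
        if written[domain] < target
    }


def off_blueprint_items(blueprint, items):
    """Return the ids of items whose domain is not in the blueprint."""
    return [item["id"] for item in items if item["domain"] not in blueprint]


print("Missing items per domain:", coverage_gaps(blueprint, draft_items))
print("Items outside the blueprint:", off_blueprint_items(blueprint, draft_items))
```

Under-covered domains point to incomplete item sampling, while items outside the blueprint are candidates for content irrelevance. The same structure could be extended with a difficulty tag per item to check that each domain includes a spread of difficulty levels.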

3. Gather evidence that your test covers a representative sample of the construct

After developing the test, you should gather evidence that it represents a comprehensive sample of the construct. This helps ensure that the test items reflect all relevant aspects of the content domains.

Here’s how to gather the evidence:

Have SMEs review the test: Ask subject matter experts to evaluate the test for relevance and coverage.

Assess SMEs’ agreement: Have your SMEs judge whether each test item is essential and representative of the content domain, discuss any disagreements, and use content validity ratios (CVRs) to quantify their level of agreement.
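
As an illustrative sketch of the last step, the snippet below computes Lawshe's content validity ratio for each item: CVR = (n_e - N/2) / (N/2), where n_e is the number of SMEs who rate the item "essential" and N is the size of the SME panel. The ratings, item names, and the helper `content_validity_ratio` are hypothetical, and this article does not state which CVR variant or cutoff any particular test provider uses.

```python
def content_validity_ratio(essential_votes: int, panel_size: int) -> float:
    """Lawshe's CVR: -1 when no SME says "essential", +1 when all of them do."""
    if panel_size <= 0:
        raise ValueError("panel_size must be positive")
    if not 0 <= essential_votes <= panel_size:
        raise ValueError("essential_votes must be between 0 and panel_size")
    half = panel_size / 2
    return (essential_votes - half) / half


# Hypothetical ratings from a panel of eight SMEs.
# For each item, True means the SME rated the item "essential".
ratings = {
    "item_1": [True, True, True, True, True, True, True, True],
    "item_2": [True, True, True, True, True, True, False, False],
    "item_3": [True, True, True, False, False, False, False, False],
}

cvr_by_item = {
    item: content_validity_ratio(sum(votes), len(votes))
    for item, votes in ratings.items()
}

for item, cvr in cvr_by_item.items():
    print(f"{item}: CVR = {cvr:+.2f}")
```

A CVR near +1 means nearly all SMEs consider the item essential, while a value at or below 0 means half or fewer do, which makes the item a candidate for revision or removal. The minimum acceptable CVR depends on panel size, so it is safer to consult a published critical-values table than to rely on a single fixed cutoff.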

4. Deploy and refine your test

Once the test is developed and validated, regular monitoring and refinement are necessary to maintain its quality and relevance over time.

Here’s how to refine your test:

Monitor to ensure content stays relevant: Regularly review the test to ensure it reflects current knowledge and practices within the content domains.

Refine test items: Use feedback and ongoing data analysis to update and improve test items.

Remove or revise questions: Eliminate or modify any questions that no longer accurately represent the content domain.

Repeat the process: Consistently monitor, refine, and document changes to keep the test valid and effective.

By following these steps, you can ensure that your test has strong content validity, making it an effective tool for accurately measuring the intended construct.

How do we ensure content validity at TestGorilla?

At TestGorilla, we ensure the content validity of our tests through a thorough, multistage development and review process, involving multiple subject matter experts (SMEs) in both the design and item writing phases. Here's a quick look at how we do it:

Our assessment development team collaborates closely with SMEs to create and validate the structure of each test and its questions.

Once the test content is developed, it is reviewed independently by another SME to check for accuracy and technical precision. Our assessment specialists and SMEs work together to ensure the test adheres to industry best practices and remains valid, reliable, fair, and inclusive.

After a test is published, it enters an ongoing monitoring and evaluation process. We continuously review our tests to keep their content up-to-date as the subject matter evolves.

This multistage approach gives us confidence that every test in our library accurately covers the content needed to assess individuals with varying levels of expertise in the test's subject area.

The test descriptions in our library are designed to provide you with an overview of each test’s content, helping you determine if it’s suitable for a specific role. We include a summary of the test, the skills it covers, and examples of roles where the test might be applicable in the hiring process. Additionally, we offer a few preview questions so you can see how they are presented to candidates and how responses are evaluated.

TestGorilla’s team of Intellectual Property Development Specialists, psychometricians, and data scientists also meticulously evaluate and report test validity evidence in test fact sheets.

Avoid bad hires with valid skills tests

Establishing the content validity and reliability of your skills tests helps you avoid bad hires and biased recruitment processes.

The best approach is to search for credible talent discovery platforms like TestGorilla, which offer hundreds of tests with high validity. We do all the heavy lifting of designing, testing, refining, and optimizing tests.

All you have to do is sign up for a free forever plan and start using our talent assessments to hire better candidates.

Content validity FAQs

In this section, you can find answers to the most common questions about content validity.

How do you determine if a test has strong content validity?

A test has strong content validity when it adequately covers the entire domain of the construct it is intended to measure. To determine whether yours does, start by conducting a thorough job analysis or domain specification to define the knowledge, skills, and abilities the test should assess. Next, ask subject matter experts (SMEs) within your organization to take the test and review its items. Their insights will help confirm that the test items accurately reflect the defined content domains and give you evidence of the test's content validity.

What is content validity vs. construct validity?

Content validity evaluates whether a test comprehensively covers the entire concept, ensuring that test items accurately represent the construct in all its dimensions. In contrast, construct validity measures how well a test reflects the theoretical concept it aims to measure. While you might wonder whether content validity or construct validity is more important, both are essential for creating effective tests.

What is an example of good content validity?

A good example of content validity is a skills-based test for project management that covers key areas like planning, resource allocation, and risk management. If subject matter experts review the test and confirm that the items reflect the essential skills outlined for project management, it demonstrates strong content validity. Additionally, if the test avoids unrelated content, it shows that the items stay within the intended subject rather than measuring something else.

Science series materials are brought to you by TestGorilla’s team of assessment experts: a group of IO psychologists, data scientists, psychometricians, and IP development specialists with a deep understanding of the science behind skills-based hiring.
